- RTX 4080 release date: November 16, 2022

- Nvidia GeForce RTX 4080 (16GB) price: $1,199

- RTX 4080 12GB version ‘unlaunched’

- Built on Nvidia’s Ada Lovelace architecture and using TSMC’s 4N process

- No Founders Edition of the GeForce RTX 4080 (12GB)

Nvidia has announced that it will no longer be releasing the GeForce RTX 4080 12GB card, at least not in its expected form. That leaves the RTX 4080 16GB card as the first, and now potentially the only, card of its name.

We evidently weren’t the only ones confused about the planned launch of two different cards, with wholly different GPUs, and completely different specs, but sharing the same name.

“The RTX 4080 12GB is a fantastic graphics card, but it’s not named right. Having two GPUs with the 4080 designation is confusing,” Nvidia says in a surprisingly concise blog post.

“So, we’re pressing the ‘unlaunch’ button on the 4080 12GB. The RTX 4080 16GB is amazing and on track to delight gamers everywhere on November 16th.”

The GeForce RTX 4090 was the first card from the RTX 40-series out of the door on October 12, and now we know it will be followed on November 16 by a single RTX 4080. You’ll find the full specs and details of all these GPUs below.

Release Date

The GeForce RTX 4080 is launching on November 16 this year, in one 16GB SKU.

You can sign up to be notified when the cards are available over on Nvidia’s site. Resellers are expected to offer a similar service: not quite pre-orders, but a way for buyers to sign up for notifications when the cards arrive.

There’s nothing official from Nvidia on when the review embargo lifts for these cards, but going by the RTX 4090’s launch it is likely to be around November 15.

Price

When it comes to the thorny subject of price, the RTX 4080 makes for difficult reading. While the top-end card, the GeForce RTX 4090, can naturally enjoy an elevated price point due to its flagship positioning, that’s not usually true when it comes to the 80-level cards.

The GeForce RTX 4080 (16GB) has a starting price of $1,199. For comparison, the GeForce RTX 3080 (10GB) launched at $699, making this a $500 more expensive offering. Like the RTX 4090, this will be available as a Founders Edition card.

The GeForce RTX 4080 (12GB) had a starting price of $899. But with that card nixed we don’t know what that will mean for the pricing of any potential RTX 4070 or RTX 4070 Ti with a similar AD104 GPU. There does seem to be a big price void to fill below the two $1,200+ Ada graphics cards.
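
To put that generational jump in numbers, here is a quick sketch using only the launch prices quoted in this article. The dictionary and function names are our own, purely for illustration.

```python
# Launch prices in US dollars, as quoted in this article.
LAUNCH_PRICES = {
    "RTX 3080 (10GB)": 699,
    "RTX 4080 (12GB)": 899,   # the 'unlaunched' card
    "RTX 4080 (16GB)": 1199,
}


def price_increase(old: int, new: int) -> tuple[int, float]:
    """Return the absolute (USD) and relative (%) increase from old to new."""
    diff = new - old
    return diff, diff / old * 100


diff, pct = price_increase(
    LAUNCH_PRICES["RTX 3080 (10GB)"], LAUNCH_PRICES["RTX 4080 (16GB)"]
)
print(f"RTX 4080 (16GB) vs RTX 3080 (10GB): +${diff} ({pct:.1f}% more)")
```

The same helper works for any other pair of prices in the table further down the page.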

Performance

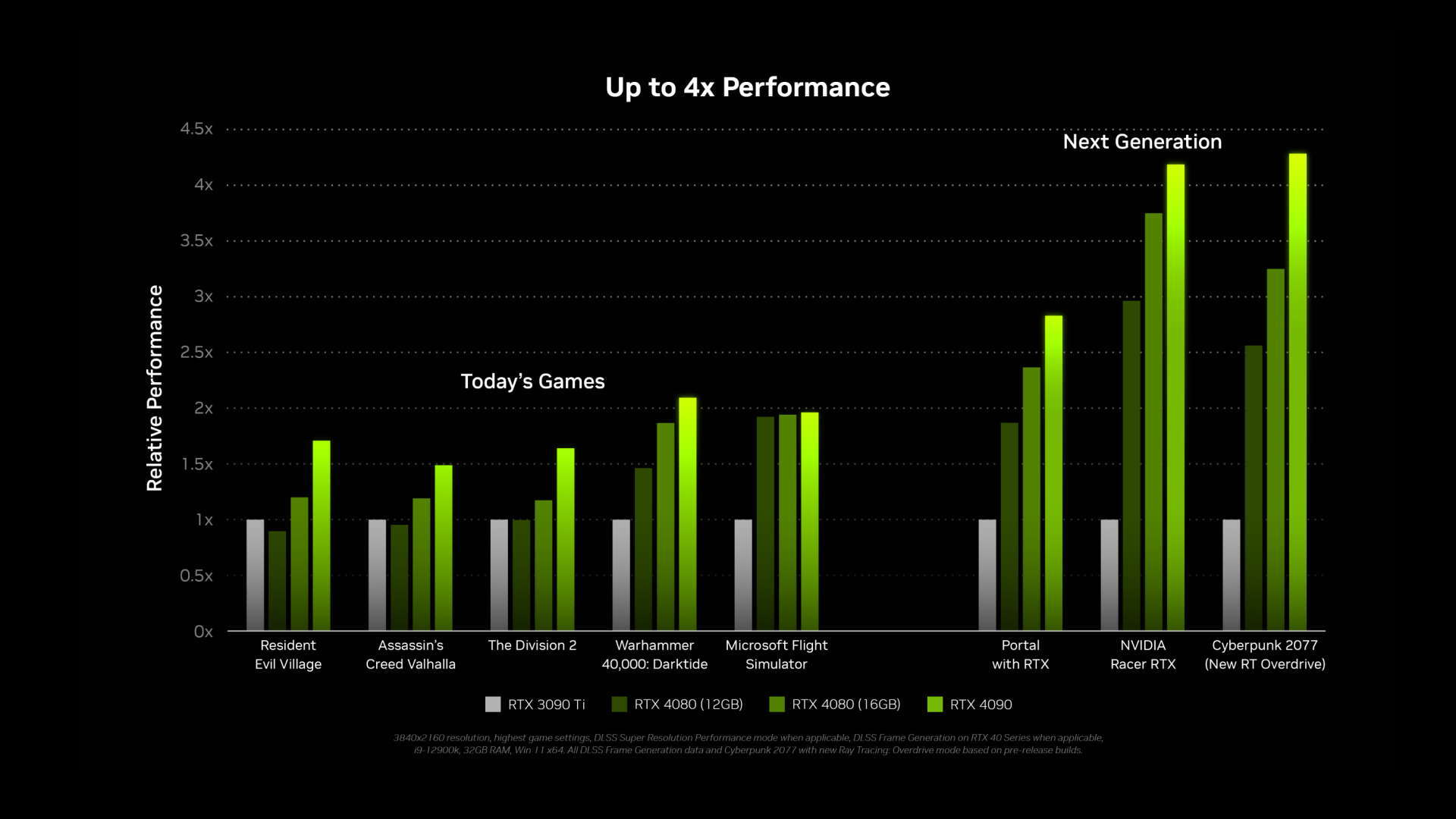

Nvidia has released some internal RTX 4080 benchmark figures to show off its latest architecture, and it certainly makes for some interesting reading. While all of the new Ada Lovelace GPUs enjoy significant increases in what Nvidia calls “Next Generation” games, things aren’t looking so rosy when it comes to today’s games.

The likes of Resident Evil Village, Assassin’s Creed Valhalla, and The Division 2 all appear to show performance drops for the RTX 4080 (12GB) edition and only small increases for the RTX 4080 (16GB) compared to the RTX 3090 Ti. That probably goes some way to explaining why the 12GB card was canned…

This isn’t the absolute home run you might expect for the money, though things are a bit healthier when it comes to Microsoft Flight Simulator and Warhammer 40,000: Darktide, which enjoy a near doubling of the frame rate. It’s going to be interesting to see how these cards perform in a range of titles when we do get them on the test benches. While the RTX 4090 clearly has some serious performance chops, the picture isn’t as clear cut lower down the stack.

Specs

The RTX 4080 uses Nvidia’s Ada Lovelace architecture, boasting support for the new 3rd generation RT Cores, 4th generation Tensor Cores, and DLSS 3. Nvidia has stuck with the PCIe 4.0 interface for this generation, and the cards support Resizable BAR, Microsoft DirectX 12 Ultimate, and the Vulkan RT API.

Nvidia will be producing Founders Edition versions of the GeForce RTX 4080 (16GB), but there will also be a host of AIB-produced versions of the second-tier Ada GPU launching at the same time, likely with pricing that gets mighty close to the $1,600 reference price of the RTX 4090…

The RTX 4080 is using a completely different GPU to the RTX 4090, and not a cut-down version of the top Ada chip. The AD103 GPU in this 16GB card houses 9,728 CUDA cores, which is considerably fewer than the 16,384 CUDA cores of the AD102 GPU in the RTX 4090.

It’s also running its 16GB of GDDR6X memory on just a 256-bit memory bus, as opposed to the 320-bit bus of the original RTX 3080 (10GB) and the 384-bit bus of the later 12GB version. How much of a difference that makes will only become clear once we get the cards in our own hands.

We’ve included the RTX 4080 12GB specs below, just to show what Nvidia was aiming to produce at this $899 level.

| | RTX 3080 (10GB) | RTX 3080 (12GB) | RTX 4080 (12GB) | RTX 4080 (16GB) |
|---|---|---|---|---|
| GPU | GA102-200-KD-A1 | GA102-220-A1 | AD104-400 | AD103-300 |
| CUDA Cores | 8,704 | 8,960 | 7,680 | 9,728 |
| Base Clock | 1,440MHz | 1,260MHz | 2,310MHz | 2,210MHz |
| Boost Clock | 1,710MHz | 1,710MHz | 2,610MHz | 2,510MHz |
| Memory Bus | 320-bit | 384-bit | 192-bit | 256-bit |
| Memory Type | 10GB GDDR6X | 12GB GDDR6X | 12GB GDDR6X | 16GB GDDR6X |
| Memory Speed | 19Gbps | 19Gbps | 21Gbps | 23Gbps |
| Graphics Card Power (W) | 320W | 350W | 285W | 320W |
| Required System Power (W) | 750W | 750W | 700W | 750W |
| Launch Price | $699 | $799 | $899 | $1,199 |
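
For a rough sense of what those narrower buses mean, peak theoretical memory bandwidth can be estimated as bus width (in bytes) multiplied by the per-pin memory speed. The sketch below applies that standard formula to the bus widths and memory speeds listed in the table. The function and variable names are ours, and the results are paper figures only: they ignore on-chip cache and any other architectural changes, so they are no substitute for real benchmarks.

```python
def peak_bandwidth_gbs(bus_width_bits: int, data_rate_gbps: float) -> float:
    """Peak theoretical bandwidth in GB/s: (bits / 8) bytes per transfer * Gbps per pin."""
    return bus_width_bits / 8 * data_rate_gbps


# (memory bus width in bits, memory speed in Gbps), taken from the spec table.
CARDS = {
    "RTX 3080 (10GB)": (320, 19),
    "RTX 3080 (12GB)": (384, 19),
    "RTX 4080 (12GB)": (192, 21),
    "RTX 4080 (16GB)": (256, 23),
}

BANDWIDTH = {name: peak_bandwidth_gbs(*spec) for name, spec in CARDS.items()}

for name, gbs in BANDWIDTH.items():
    print(f"{name}: {gbs:.0f} GB/s")
```

On these listed specs, the faster memory on the RTX 4080 (16GB) does not fully offset its narrower bus compared with either RTX 3080, at least on paper.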