Samsung’s new GDDR6W graphics memory doubles performance and capacity

Samsung has announced its next-gen memory tech for high performance graphics cards: GDDR6W. Claimed to offer double the capacity and performance of conventional GDDR6 memory, GDDR6W is said to be comparable with HBM2E for outright performance and will enable overall graphics memory bandwidth of 1.4TB/s. For reference, Nvidia’s beastly GeForce RTX 4090 currently tops out at 1TB/s using GDDR6X.

Samsung is bigging up the new tech as key to enabling “immersive metaverse experiences.” However, GDDR6W probably won’t deliver more memory bandwidth or faster graphics cards in the short term. More on that in a moment.

Samsung says the new memory spec boosts per-pin bandwidth to 22Gbps, up from the 16Gbps maximum of the GDDR6 spec (GDDR6X tops out at 21Gbps per pin). More significantly, GDDR6W doubles overall bandwidth per memory package from 24Gbps for GDDR6 to 48Gbps, chiefly by doubling the number of pins on each package.
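The doubling follows from simple arithmetic: a package’s bandwidth is its pin count multiplied by the per-pin data rate. The sketch below assumes 32 data pins per GDDR6 package and 64 per GDDR6W package (the widths implied by the 384-bit, 12-package and 512-bit, eight-package configurations in this article) and applies the same 22Gbps per-pin rate to both, to isolate the effect of pin count. The function name is purely illustrative.

```python
def package_bandwidth_gbytes(pins: int, gbps_per_pin: float) -> float:
    """Peak bandwidth of one memory package in GB/s (8 bits per byte)."""
    return pins * gbps_per_pin / 8

# Assumed pin counts: 32 for a GDDR6 package, 64 for a GDDR6W package.
# Both use 22Gbps per pin here so only the pin count differs.
gddr6 = package_bandwidth_gbytes(32, 22)
gddr6w = package_bandwidth_gbytes(64, 22)

print(f"GDDR6 package:  {gddr6:.0f} GB/s")   # 88 GB/s
print(f"GDDR6W package: {gddr6w:.0f} GB/s")  # 176 GB/s
print(f"Ratio: {gddr6w / gddr6:.1f}x")       # 2.0x
```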

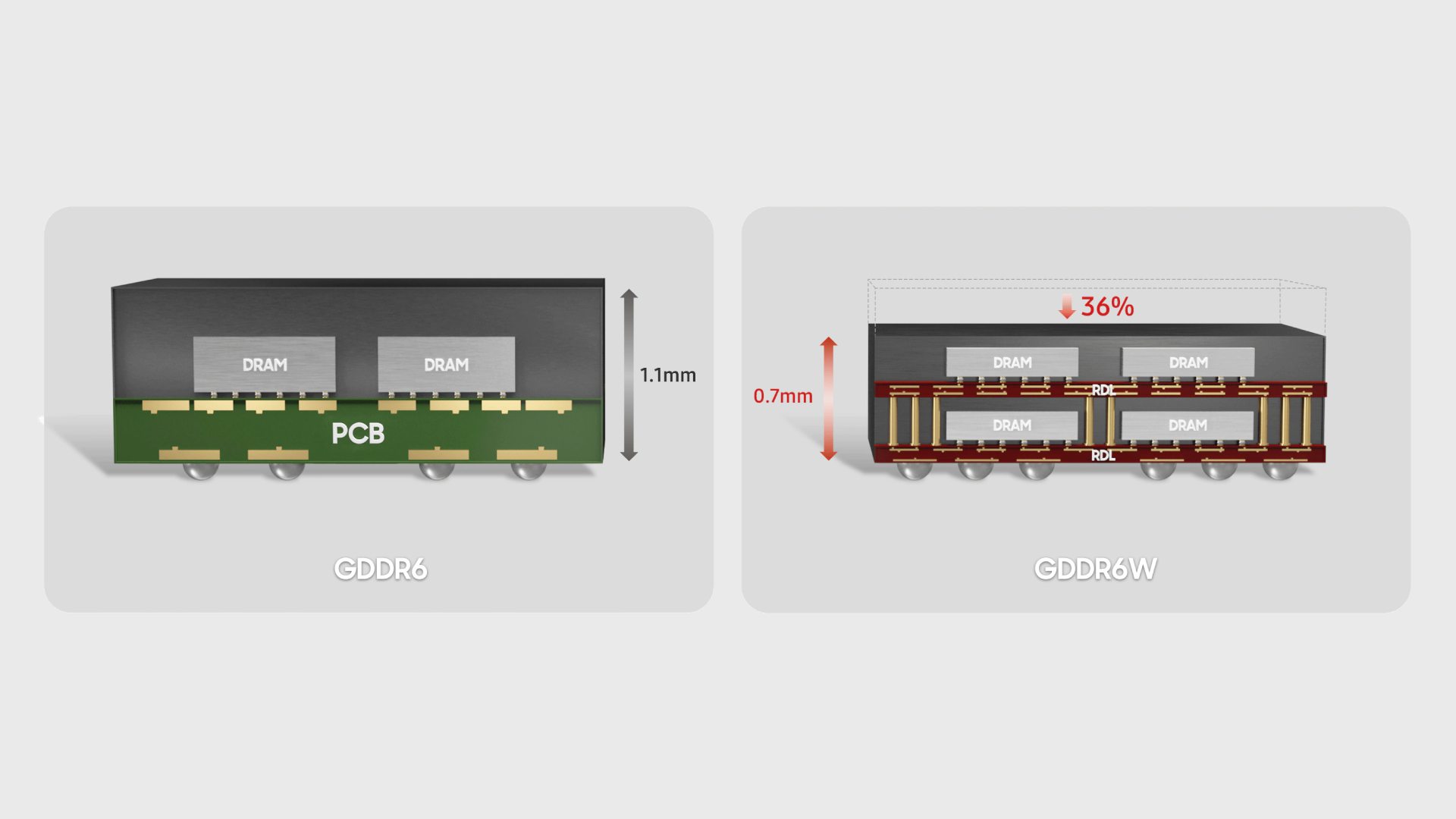

Chip capacity has also doubled, from 16Gb to 32Gb. Samsung has achieved all that while keeping exactly the same physical footprint as GDDR6 and GDDR6X. Perhaps most impressively, it has done so by stacking two memory chips in each package while actually reducing overall package height by 36 percent.

It’s worth noting that the 1.4TB/s overall memory bandwidth claim relates to a 512-bit wide memory interface with eight GDDR6W packages totalling 32GB of graphics memory. A 24GB RTX 4090 uses 12 GDDR6X packages over a 384-bit bus. Given the same bus width, GDDR6X would be only slightly slower than the new GDDR6W standard.
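Both the 1.4TB/s figure and the RTX 4090’s roughly 1TB/s fall out of the same formula: bus width in bits times the per-pin data rate, divided by eight to convert bits to bytes. A minimal sketch, assuming 4GB (32Gb) per GDDR6W package and 2GB (16Gb) per GDDR6X package; the helper name is hypothetical.

```python
def bus_bandwidth_gbytes(bus_bits: int, gbps_per_pin: float) -> float:
    """Aggregate peak bandwidth in GB/s for a memory bus of the given width."""
    return bus_bits * gbps_per_pin / 8

# Samsung's example: eight 4GB GDDR6W packages on a 512-bit bus at 22Gbps.
gddr6w_bw = bus_bandwidth_gbytes(512, 22)   # 1408 GB/s, i.e. ~1.4TB/s
gddr6w_cap = 8 * 4                          # 32 GB

# RTX 4090: twelve 2GB GDDR6X packages on a 384-bit bus at 21Gbps.
rtx4090_bw = bus_bandwidth_gbytes(384, 21)  # 1008 GB/s, i.e. ~1TB/s
rtx4090_cap = 12 * 2                        # 24 GB

# Hypothetical GDDR6X on the same 512-bit width, at 21Gbps per pin.
gddr6x_512_bw = bus_bandwidth_gbytes(512, 21)  # 1344 GB/s

print(gddr6w_bw, gddr6w_cap, rtx4090_bw, rtx4090_cap, gddr6x_512_bw)
```

The last calculation is what “only slightly slower” means in practice: 1344GB/s versus 1408GB/s at the same bus width, a gap of under 5 percent.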

The key point here is that Samsung is comparing GDDR6W’s performance directly with GDDR6 rather than GDDR6X, no doubt because GDDR6X is produced only by Micron.

Where GDDR6W has a clear advantage, however, is capacity. With double the capacity per package of both GDDR6 and GDDR6X, only half as many chips are required for a given amount of total memory, opening up the possibility of graphics cards with even more VRAM.

Returning to the RTX 4090, had the card used GDDR6W, it would have needed only six memory chips rather than 12 to achieve the same 24GB capacity and roughly 1TB/s of bandwidth.
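The halving of package count can be checked with the same arithmetic. This sketch assumes each GDDR6X package contributes 32 data pins and 2GB, and each GDDR6W package 64 pins and 4GB; `MemoryConfig` is a hypothetical helper, not any real API.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class MemoryConfig:
    name: str
    pins_per_package: int     # assumed data pins per package
    gbytes_per_package: int   # assumed capacity per package in GB
    gbps_per_pin: float       # per-pin data rate in Gbps

    def for_capacity(self, capacity_gb: int) -> tuple[int, int, float]:
        """Return (packages, bus width in bits, bandwidth in GB/s) for a target capacity."""
        packages = capacity_gb // self.gbytes_per_package
        bus_bits = packages * self.pins_per_package
        return packages, bus_bits, bus_bits * self.gbps_per_pin / 8


gddr6x = MemoryConfig("GDDR6X", 32, 2, 21)
gddr6w = MemoryConfig("GDDR6W", 64, 4, 22)

# Build the RTX 4090's 24GB from each memory type.
for cfg in (gddr6x, gddr6w):
    packages, bus_bits, bw = cfg.for_capacity(24)
    print(f"{cfg.name}: {packages} packages, {bus_bits}-bit bus, {bw:.0f} GB/s")
```

Both configurations land on the same 384-bit bus; GDDR6W simply gets there with six wider packages instead of 12 narrower ones.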

So, here’s the critical takeaway: with GDDR6W you get double the performance and double the capacity per memory package (actually slightly more than double the performance of GDDR6X, thanks to 22Gbps per pin versus 21Gbps). However, for any given capacity, you need only half as many packages. In the end, then, the actual memory bandwidth available to the GPU is essentially the same as with GDDR6X at any given capacity.
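Why the bandwidth ends up essentially equal: for a capacity of C gigabytes, GDDR6X needs C/2 packages of 32 pins (a 16C-bit bus), while GDDR6W needs C/4 packages of 64 pins, which is also a 16C-bit bus. Only the per-pin rate differs. A quick check, under the same assumed per-package figures (2GB and 32 pins for GDDR6X, 4GB and 64 pins for GDDR6W); the function name is illustrative.

```python
def bandwidth_for_capacity(capacity_gb: int, gb_per_pkg: int,
                           pins_per_pkg: int, gbps_per_pin: float) -> float:
    """Peak GB/s when a given capacity is built from identical packages."""
    packages = capacity_gb // gb_per_pkg
    return packages * pins_per_pkg * gbps_per_pin / 8


for capacity in (16, 24, 32):
    x = bandwidth_for_capacity(capacity, 2, 32, 21)  # GDDR6X
    w = bandwidth_for_capacity(capacity, 4, 64, 22)  # GDDR6W
    print(f"{capacity}GB: GDDR6X {x:.0f} GB/s, GDDR6W {w:.0f} GB/s, ratio {w / x:.3f}")
```

Whatever the capacity, the ratio stays at 22/21, about 1.048, so GDDR6W’s bandwidth edge at equal capacity comes only from the faster pins.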

What GDDR6W really offers, then, is the option of using fewer packages to achieve the same capacity and performance, very likely at lower cost, or else of pushing capacity and performance to levels not yet seen. Look at a 24GB RTX 4090 board, for instance, and there’s very limited space around the GPU for more memory packages.

A 48GB RTX 4090 with double the memory capacity simply wouldn’t be practical with GDDR6X, even if that much memory would almost certainly be very silly indeed. The point is that GDDR6W opens up possibilities for the future. GDDR6W also looks particularly interesting for laptops, where fewer memory packages are always a good thing.

Nvidia currently favours Micron’s GDDR6X, while AMD is sticking with GDDR6 for its latest graphics cards. Neither has indicated any plans to jump on Samsung’s new GDDR6W technology. Indeed, it’s not entirely clear whether any existing GPUs, including Nvidia’s latest RTX 40 Series and AMD’s new Radeon RX 7000, support GDDR6W. However, we think support is likely, given that GDDR6W essentially amounts to a new packaging tech for GDDR6 rather than a new memory tech per se. Watch this space…
